Best Practices for Process Development Data Management

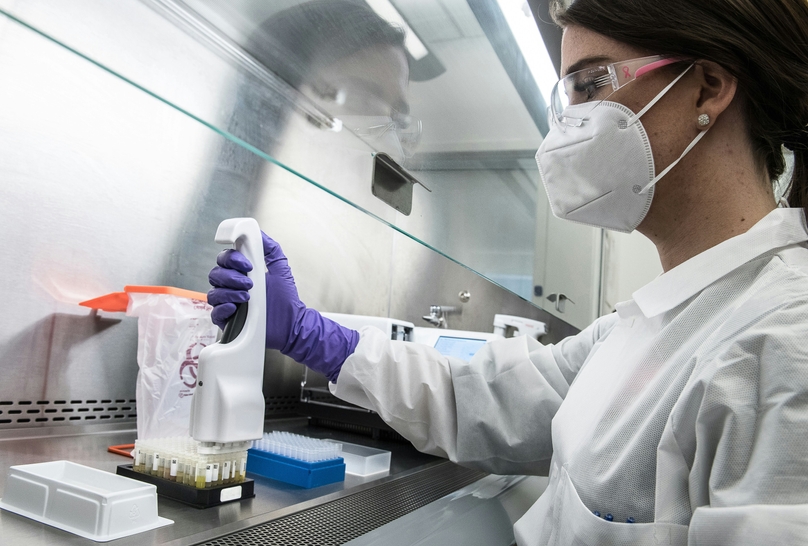

Process development generates some of the most complex, high-stakes data in biotech. Here's a practical framework for keeping it connected, traceable, and audit-ready—at every stage of growth.

Why process development data is uniquely hard to manage

Most lab data is complex. Process development data is in a category of its own.

A single process development campaign might generate dozens of experimental runs, hundreds of parameter variations, material lots from multiple vendors, in-process analytics, and final characterization data—all tied together by a lineage that must remain intact months or years later.

When that lineage breaks—when a run's buffer lot can't be traced, a parameter deviation isn't documented, or upstream results can't be found—the consequences compound: repeated experiments, delayed timelines, and failed regulatory submissions.

The core problem: Process development data isn't just voluminous. It's interdependent. Every run connects to materials, methods, operators, equipment, and results. Managing it well means managing all of those connections—not just the data points themselves.

A practical framework: five best practices for process development data management

Regardless of your organization's size (early-stage lab, scaling biotech, or CRO), strong process development data management rests on five interconnected practices.

Best practice 1: Standardize before you scale

The most expensive data management problems in process development aren't caused by bad tools. They're caused by inconsistent conventions adopted before any tool was in place.

Teams that start with informal notebooks and shared folders often find that by the time they reach clinical or GMP-adjacent work, their historical data is essentially non-queryable. Reconstruction becomes a project in itself.

What to standardize early

- Run naming conventions: process stage, scale indicator, date, and sequential ID at minimum

- Parameter fields: agree on which parameters are always recorded and in what unit/format

- Material metadata: lot, vendor, CoA reference, storage condition, and expiry for every input

- Outcome fields: define what "success" data looks like and capture it consistently

- Deviation format: what counts as a deviation, how it's logged, and who reviews it

Rule of thumb: If two scientists run the same type of experiment and their records look structurally different, you have a standardization gap. Fix it before it multiplies.
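To make the naming and structure rules concrete, here is a minimal Python sketch. It assumes a hypothetical run ID format of STAGE-SCALE-YYYYMMDD-NNN (for example `USP-2L-20240315-007`) and hypothetical stage codes, so substitute your own conventions. `make_run_id` and `parse_run_id` reject IDs that drop a required component. `structural_gap` applies the rule of thumb by listing the field names that appear in one record but not the other.

```python
import re
from datetime import date

# Hypothetical convention: STAGE-SCALE-YYYYMMDD-NNN, e.g. "USP-2L-20240315-007".
# Stage codes and the scale format are illustrative assumptions.
STAGES = {"USP", "DSP", "FRM", "ANL"}  # upstream, downstream, formulation, analytical
RUN_ID_PATTERN = re.compile(
    r"(?P<stage>[A-Z]{3})-(?P<scale>\d+(?:\.\d+)?(?:mL|L))-(?P<date>\d{8})-(?P<seq>\d{3})"
)


def make_run_id(stage: str, scale: str, run_date: date, seq: int) -> str:
    """Build a run ID that always carries stage, scale, date, and sequence."""
    if stage not in STAGES:
        raise ValueError(f"unknown stage code: {stage}")
    run_id = f"{stage}-{scale}-{run_date:%Y%m%d}-{seq:03d}"
    if not RUN_ID_PATTERN.fullmatch(run_id):
        raise ValueError(f"run ID does not follow the convention: {run_id}")
    return run_id


def parse_run_id(run_id: str) -> dict:
    """Split a conforming run ID into its components; reject anything else."""
    match = RUN_ID_PATTERN.fullmatch(run_id)
    if match is None or match["stage"] not in STAGES:
        raise ValueError(f"non-conforming run ID: {run_id}")
    return match.groupdict()


def structural_gap(record_a: dict, record_b: dict) -> set:
    """Field names present in one record but not the other."""
    return set(record_a) ^ set(record_b)
```

For example, `make_run_id("USP", "2L", date(2024, 3, 15), 7)` returns `"USP-2L-20240315-007"`. A non-empty result from `structural_gap` for two records of the same experiment type is exactly the standardization gap described above.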

Best practice 2: Connect materials to experiments—at the record level

Traceability in process development isn't just a regulatory requirement. It's operationally essential. When a batch fails or a result is unexpected, the first question is always: what went into this run?

The problem with spreadsheet-based tracking is that materials and experiments live in separate files. The connection between them exists only in a scientist's head—or a folder name—and erodes rapidly with team turnover or time.

What connected traceability looks like in practice

- Each experimental run references specific material lots—not just material names

- Lot records include QC status, expiry, and location at time of use

- Equipment used (bioreactor ID, column serial, etc.) is part of the run record

- Operator is logged automatically, not filled in manually after the fact

- Any substitutions or deviations are flagged within the same record, not in a separate log
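A record-level link can be modeled as a run that holds references to specific lot records rather than to material names. The sketch below is illustrative only. `MaterialLot`, `Run`, the `"released"` QC status value, and all field names are assumptions, not any particular system's schema. Any problem detected when a lot is consumed is appended to the run's own deviation list, which matches the last bullet above.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class MaterialLot:
    """Snapshot of a lot as recorded in inventory (hypothetical fields)."""
    material: str
    lot: str
    vendor: str
    qc_status: str  # assumed values such as "released" or "quarantine"
    expiry: date
    location: str


@dataclass
class Run:
    run_id: str
    operator: str
    equipment: list[str]  # e.g. bioreactor ID, column serial
    lots: list[MaterialLot] = field(default_factory=list)
    deviations: list[str] = field(default_factory=list)

    def consume(self, lot: MaterialLot, used_on: date) -> None:
        """Link a specific lot to this run and flag problems in the same record."""
        if lot.qc_status != "released":
            self.deviations.append(
                f"{lot.material} lot {lot.lot} used with QC status '{lot.qc_status}'"
            )
        if used_on > lot.expiry:
            self.deviations.append(
                f"{lot.material} lot {lot.lot} used on {used_on} after expiry {lot.expiry}"
            )
        # Frozen instance: the run keeps the lot's status as it was when linked.
        self.lots.append(lot)


def runs_using_lot(runs: list[Run], lot_id: str) -> list[str]:
    """Reverse lookup: which runs consumed a given lot?"""
    return [run.run_id for run in runs if any(used.lot == lot_id for used in run.lots)]
```

Because each run stores lot objects rather than names, the reverse question ("which runs used this lot?") has a direct answer when a lot is later implicated in a failure.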

Best practice 3: Design experiment templates for your process stages

Templates are one of the highest-leverage tools in process development data management. A well-designed template enforces structure, reduces transcription errors, and makes cross-run queries far simpler.

The key is designing templates at the process stage level—upstream, downstream, formulation, analytical—rather than at the generic "experiment" level. Each stage has different required fields, different parameter sets, and different output formats.

Upstream (cell culture / fermentation)

Seed train, inoculation density, media lot, feed strategy, DO/pH/temp profiles, harvest criteria, viability at harvest

Downstream (purification)

Column ID, resin lot, load conditions, wash/elution buffers, step yield, pool purity, HCP/DNA/aggregates

Formulation

Excipient lots, target concentration, fill volume, container closure, stability time points, visual inspection

Analytical

Assay ID, instrument ID, reference standard lot, run date, acceptance criteria, raw data file reference

Templates enforce the metadata you need without requiring scientists to remember what to capture. They also make onboarding faster—a new team member using a structured template produces comparable data from day one.
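One way to express stage-level templates is as a required-field schema per stage, paired with a validator that reports what is missing, mistyped, or unexpected. In this sketch the field names paraphrase the stage lists above, units are encoded in the field names as an assumption, and only a subset of each stage's fields is shown.

```python
# Hypothetical stage templates: required fields and expected types per stage.
TEMPLATES = {
    "upstream": {
        "media_lot": str,
        "inoculation_density_e6_cells_per_mL": float,
        "feed_strategy": str,
        "viability_at_harvest_pct": float,
    },
    "downstream": {
        "column_id": str,
        "resin_lot": str,
        "step_yield_pct": float,
        "pool_purity_pct": float,
    },
    "formulation": {
        "excipient_lot": str,
        "target_concentration_mg_per_mL": float,
        "fill_volume_mL": float,
        "container_closure": str,
    },
    "analytical": {
        "assay_id": str,
        "instrument_id": str,
        "reference_standard_lot": str,
        "raw_data_file": str,
    },
}


def _matches(value: object, expected: type) -> bool:
    # Accept ints where a float is expected (95 for 95.0), but never bools.
    if expected is float:
        return isinstance(value, (int, float)) and not isinstance(value, bool)
    return isinstance(value, expected)


def validate(stage: str, record: dict) -> list[str]:
    """List problems with a record; an empty list means it fits the stage template."""
    template = TEMPLATES[stage]
    problems = []
    for name, expected in template.items():
        if name not in record:
            problems.append(f"missing field: {name}")
        elif not _matches(record[name], expected):
            problems.append(
                f"{name}: expected {expected.__name__}, got {type(record[name]).__name__}"
            )
    for name in sorted(record.keys() - template.keys()):
        problems.append(f"unexpected field: {name}")
    return problems
```

Running `validate` at entry time, rather than at analysis time, keeps non-conforming records from accumulating in the first place.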

Best practice 4: Treat deviations as first-class data

Deviations are not exceptions to document reluctantly. They are data—often the most informative data in a process development campaign.

A deviation that's captured in real time, linked to the affected run, and reviewed with appropriate context is a learning. A deviation reconstructed from memory six weeks later is a liability.

Minimum viable deviation management

- Log deviations within the run record, not in a separate system

- Capture: what was planned, what actually occurred, and why

- Flag impact: did this affect the output, and was it assessed?

- Link to corrective action or protocol update if applicable

- Make deviation history queryable across runs, not buried in individual notebooks
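The minimum viable checklist above maps naturally onto a small record type. This is a sketch under assumptions: the `Deviation` fields and the `capa_ref` identifier for a linked corrective action or protocol update are hypothetical names. Leaving `affected_output` as `None` until someone assesses it turns "was it assessed?" into a query instead of a memory exercise.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class Deviation:
    run_id: str                             # the affected run, not a free-floating log entry
    planned: str                            # what the protocol called for
    actual: str                             # what actually occurred
    reason: str                             # why it happened
    affected_output: Optional[bool] = None  # None means impact not yet assessed
    capa_ref: Optional[str] = None          # hypothetical corrective-action / protocol-update ID
    logged_at: datetime = field(default_factory=datetime.now)  # set when the record is created


def needs_assessment(devs: list[Deviation]) -> list[Deviation]:
    """Deviations whose impact on output has not been assessed yet."""
    return [d for d in devs if d.affected_output is None]


def needs_action(devs: list[Deviation]) -> list[Deviation]:
    """Deviations that affected output but have no linked corrective action."""
    return [d for d in devs if d.affected_output is True and d.capa_ref is None]


def deviation_counts(devs: list[Deviation]) -> Counter:
    """Deviation count per run, for spotting runs or steps that deviate often."""
    return Counter(d.run_id for d in devs)
```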

Best practice 5: Build for cross-run analysis from the start

The purpose of process development is optimization. Optimization requires comparison. And comparison requires that runs are structured the same way—with the same fields, the same units, and the same metadata conventions—from the beginning.

This is where most ad hoc systems fail. If runs 1 through 15 were documented informally and runs 16 onward use a structured template, the historical data becomes difficult or impossible to include in systematic analysis.

What cross-run analysis requires from your data system

- Consistent field names and data types across all runs of the same process stage

- Queryable metadata (filter by scale, operator, equipment, date range, parameter value)

- Results linked to inputs—so you can ask "what did every run where pH exceeded 7.2 yield?"

- Version control on protocols—so you know which version each run executed against
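When every run of a stage has one row with the same columns and units, the example question becomes a single query. The sketch below uses Python's built-in `sqlite3` module with an in-memory database. The table layout, the column names, and the use of titer (`titer_g_per_l`) as the yield measure are assumptions for illustration.

```python
import sqlite3

# Hypothetical flat layout: one row per run, same columns and units for every run.
SCHEMA = """
CREATE TABLE runs (
    run_id TEXT PRIMARY KEY,
    stage TEXT NOT NULL,
    scale TEXT NOT NULL,
    operator TEXT NOT NULL,
    protocol_version TEXT NOT NULL,  -- protocol revision the run executed against
    max_ph REAL,                     -- highest pH recorded during the run
    titer_g_per_l REAL               -- outcome, always stored in g/L
)
"""
FILTERABLE = {"stage", "scale", "operator", "protocol_version"}


def open_db(rows: list[tuple]) -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    conn.executemany("INSERT INTO runs VALUES (?, ?, ?, ?, ?, ?, ?)", rows)
    return conn


def yield_when_ph_above(conn: sqlite3.Connection, threshold: float) -> list[tuple]:
    """What did every run where pH exceeded the threshold yield?"""
    return conn.execute(
        "SELECT run_id, protocol_version, titer_g_per_l FROM runs "
        "WHERE max_ph > ? ORDER BY run_id",
        (threshold,),
    ).fetchall()


def runs_where(conn: sqlite3.Connection, **filters: str) -> list[str]:
    """Filter runs on metadata columns, e.g. runs_where(conn, operator="jdoe")."""
    unknown = set(filters) - FILTERABLE
    if unknown:
        raise ValueError(f"not a filterable column: {sorted(unknown)}")
    where = " AND ".join(f"{col} = ?" for col in filters) or "1 = 1"
    sql = f"SELECT run_id FROM runs WHERE {where} ORDER BY run_id"
    return [row[0] for row in conn.execute(sql, tuple(filters.values()))]
```

`runs_where` checks column names against a fixed allow-list because SQL placeholders can bind values but not column names. Returning `protocol_version` alongside each result shows which protocol revision produced it.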

Worth noting: Genemod's structured ELN and LIMS layer are designed specifically for this—enabling teams to query across experiments and connect results to samples, materials, and process parameters without manual data assembly. Teams that implement this early avoid the reconstruction work that slows down most process development programs at scale.

How data management needs evolve across organizational types

| Organization Type | Primary Data Challenge | What to Prioritize |
|---|---|---|
| Early-stage biotech / new lab | Informal conventions adopted before systems are in place; hard to retrofit later | Naming standards, basic templates, material-to-experiment linkage from day one |
| Scaling biotech (Series A–C) | Growing team, parallel programs, increasing handoffs; data starts fragmenting across people and tools | Cross-run comparability, deviation tracking, permissions, audit trails, GMP readiness planning |
| CROs and CDMOs | Multiple client programs running simultaneously; data must be isolated, traceable, and deliverable | Program-level data segregation, structured reporting, client-ready traceability packages, access controls |

Tip: The earlier you implement structured data management, the lower the total cost—in time, rework, and regulatory risk. Every stage above has unique pressures, but the underlying data disciplines are the same.

Where Genemod fits: a connected foundation for process development data

Genemod is built for the operational reality of modern process development—where samples, experiments, materials, and results need to stay connected across a program's entire lifecycle.

What Genemod brings to process development teams

- Lifecycle-aware inventory: material lots, status, lineage, and storage location—linked directly to the experiments that consume them

- Structured ELN with templates: stage-specific experiment templates that enforce metadata consistency and make cross-run analysis possible without cleanup

- Connected files and results: raw data, analytical outputs, and reports stay attached to the exact run they came from—not scattered across shared drives

- Deviation and change tracking: in-context logging within the run record, with queryable history across experiments

- Audit-ready from the start: automated timestamps, operator logs, and change history—built in, not bolted on

- Scalable governance: role-based permissions and workflow controls that grow with your team and your regulatory requirements

Bottom line: Process development data management is not a documentation problem. It's an infrastructure problem. The labs and CROs that get it right don't just have better records—they move faster, repeat less work, and enter regulatory interactions with confidence.

Genemod is designed to be that infrastructure: lightweight enough to deploy early, robust enough to carry you through IND-enabling studies, GMP transition, and beyond.