Establishing a New Biotech Lab: A Practical Guide to Infrastructure, Data, and Scale

Starting a new lab is not just buying equipment. It’s designing an operating system—facilities, workflows, data structures, and governance—so the lab can scale without losing control.

Why most new labs struggle later—even if they start strong

New labs usually optimize for speed: get benches online, run the first experiments, generate early data. That’s rational. But what breaks later is rarely the science—it’s the operational layer around the science.

By the time a team hits 10–20 people, runs multiple programs, or adds a partner (CRO/CDMO), the lab often has a familiar set of symptoms: missing sample context, inconsistent naming, scattered files, and increasing time spent “reconstructing what happened.”

Rule of thumb: Build for today’s experiments—but design for tomorrow’s coordination. The cost of rebuilding infrastructure later is almost always higher than doing the basics right early.

A practical framework: Think in three layers

When establishing a lab, it helps to design three layers in parallel:

- Physical layer: facility layout, safety, equipment, storage, and access

- Workflow layer: how work moves—requests, handoffs, approvals, and roles

- Data layer: how identity, metadata, files, and results stay connected over time

If you build only the physical layer, your lab will run, but it won’t scale.

1) Facility and space planning (what matters most)

You don’t need a perfect facility to start. You need a facility that supports safe execution and predictable workflows.

Design for flow

Map how samples and people move. Separate “clean” and “dirty” zones. Minimize backtracking and bottlenecks.

Plan for growth

Leave room for additional incubators, freezers, and bench space. Expansion is easier when utilities and layout anticipate it.

Storage is infrastructure

Freezer strategy (capacity, redundancy, monitoring) often limits growth sooner than bench space does.

Access control matters

Even early, decide how you handle security, visitor access, and restricted materials.

Early decisions that prevent chaos later

- Standardize labeling and barcode strategy from day one

- Define storage hierarchy (freezer → rack → box → position) consistently

- Decide how you will monitor critical equipment (alarms, logs, ownership)

- Establish a simple intake process for new materials and reagents
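As a rough illustration of the storage hierarchy above, the sketch below models a location as freezer → rack → box → position and refuses to place two barcodes in the same position. The class names (`StorageLocation`, `StorageIndex`) and the label formats such as `FRZ-01` are assumptions made for this example, not a prescribed standard.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class StorageLocation:
    """One slot in the hierarchy: freezer -> rack -> box -> position."""
    freezer: str
    rack: str
    box: str
    position: str  # hypothetical grid label, e.g. "A1"

    def path(self) -> str:
        # Render the location in the same order everywhere it is displayed.
        return " > ".join((self.freezer, self.rack, self.box, self.position))


class StorageIndex:
    """Keeps a one-to-one mapping between barcodes and positions."""

    def __init__(self) -> None:
        self._by_location: dict[StorageLocation, str] = {}
        self._by_barcode: dict[str, StorageLocation] = {}

    def place(self, barcode: str, location: StorageLocation) -> None:
        # Reject double-booked positions and barcodes that are already stored.
        if location in self._by_location:
            raise ValueError(f"{location.path()} is already occupied")
        if barcode in self._by_barcode:
            raise ValueError(f"{barcode} is already stored elsewhere")
        self._by_location[location] = barcode
        self._by_barcode[barcode] = location

    def locate(self, barcode: str) -> StorageLocation:
        return self._by_barcode[barcode]


index = StorageIndex()
slot = StorageLocation("FRZ-01", "R2", "B3", "A1")
index.place("BC0001", slot)
print(index.locate("BC0001").path())  # FRZ-01 > R2 > B3 > A1
```

The point of the design is that a location is always described with the same four levels, so a label printed on day one still resolves to the same slot after the lab adds freezers.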

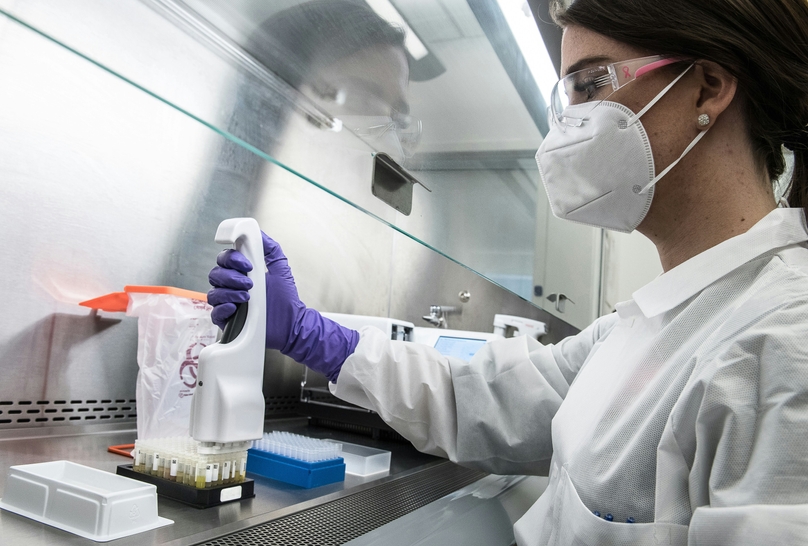

2) Core equipment: buy what you can operationalize

New labs often over-invest in instruments before they have processes to support them. The practical rule: prioritize what you can run reliably, maintain, and capture data from consistently.

Core categories most labs need early

- Cold storage: -20°C, -80°C, LN2 (as needed), plus monitoring and contingency

- Basic sample processing: centrifuges, hoods, pipetting infrastructure

- Cell culture (if relevant): incubators, biosafety cabinets, CO2 supply, QC routines

- Analytical basics: plate readers, qPCR, imaging (depending on modality)

- Shared utilities: water, gas lines, waste management, EHS compliance

What to avoid early

- Buying specialized instruments before workflows and data capture are defined

- Assuming “we’ll organize the data later” (you won’t, unless forced)

- Adding tools that require heavy IT integration without an owner

3) Sample management: build identity and lineage before volume explodes

Most labs don’t lose samples because they lack freezers. They lose samples because identity and lineage aren’t enforced consistently.

Minimum viable sample governance

- Define sample types (e.g., plasmid, cell line, lot, aliquot, patient-derived material)

- Adopt a consistent ID format (human-readable + barcode-friendly)

- Standardize core metadata fields (source, owner, status, concentration, batch)

- Track lineage (parent/child, splits, derivatives) from the beginning

Key idea: Sample tracking is not a spreadsheet problem. It’s a lifecycle problem. If you don’t capture lineage and status, location alone won’t save you.
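To make the governance points above concrete, here is a minimal sketch of a sample registry that issues IDs in one format, stores a few core metadata fields, and records parent/child lineage. The ID scheme (a three-letter type prefix plus a six-digit counter), the `PREFIXES` mapping, and the `SampleRegistry` class are all hypothetical choices for illustration; a real lab would pick its own.

```python
from __future__ import annotations

import itertools
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical type prefixes; extend to match your own sample types.
PREFIXES = {"plasmid": "PLA", "cell_line": "CEL", "lot": "LOT", "aliquot": "ALQ"}
ID_PATTERN = re.compile(r"^[A-Z]{3}-\d{6}$")


@dataclass
class Sample:
    sample_id: str
    sample_type: str
    owner: str
    status: str = "available"
    parent_id: Optional[str] = None  # None marks a root sample


class SampleRegistry:
    def __init__(self) -> None:
        self._samples: dict[str, Sample] = {}
        self._counter = itertools.count(1)

    def register(self, sample_type: str, owner: str,
                 parent_id: Optional[str] = None) -> Sample:
        if sample_type not in PREFIXES:
            raise ValueError(f"unknown sample type: {sample_type}")
        # Lineage is enforced at creation: a child must name a known parent.
        if parent_id is not None and parent_id not in self._samples:
            raise KeyError(f"unknown parent: {parent_id}")
        sample_id = f"{PREFIXES[sample_type]}-{next(self._counter):06d}"
        sample = Sample(sample_id, sample_type, owner, parent_id=parent_id)
        self._samples[sample_id] = sample
        return sample

    def lineage(self, sample_id: str) -> list[str]:
        """Return IDs from the given sample back to its root ancestor."""
        chain = []
        current: Optional[str] = sample_id
        while current is not None:
            chain.append(current)
            current = self._samples[current].parent_id
        return chain


registry = SampleRegistry()
lot = registry.register("lot", owner="alice")
aliquot = registry.register("aliquot", owner="bob", parent_id=lot.sample_id)
print(registry.lineage(aliquot.sample_id))  # ['ALQ-000002', 'LOT-000001']
```

Because the parent link is checked when a sample is registered, splits and derivatives cannot be created without a traceable origin, which is the lifecycle property a location-only spreadsheet does not give you.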

4) Data infrastructure: the part you’ll regret delaying

A lab’s “data infrastructure” is not just storage. It’s the way context is preserved so results remain interpretable months later—especially when people change or programs evolve.

What you want your data system to support

- Link samples to experiments and results automatically

- Keep files attached to the right context (not lost in shared drives)

- Standardize metadata so analysis doesn’t require cleanup every time

- Preserve audit trails and change history where needed
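One way to picture these requirements is a result record that carries its experiment ID, sample IDs, and file path together, checks for required metadata, and logs every metadata change. This is a sketch under assumptions: the `ResultRecord` class, the `REQUIRED_FIELDS` list, and the example IDs and path are invented for illustration and do not describe any particular product's data model.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical minimum metadata every result should carry.
REQUIRED_FIELDS = ("assay", "instrument", "operator")


@dataclass
class ResultRecord:
    experiment_id: str
    sample_ids: tuple[str, ...]
    file_path: str
    metadata: dict[str, str] = field(default_factory=dict)
    # Each entry: (UTC timestamp, user, field name, old value, new value)
    history: list[tuple[str, str, str, str, str]] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        # Report which required metadata fields have not been filled in.
        return [name for name in REQUIRED_FIELDS if name not in self.metadata]

    def update_metadata(self, key: str, value: str, user: str) -> None:
        # Record who changed what, and when, before applying the change.
        old = self.metadata.get(key, "")
        timestamp = datetime.now(timezone.utc).isoformat()
        self.history.append((timestamp, user, key, old, value))
        self.metadata[key] = value


record = ResultRecord(
    experiment_id="EXP-0042",
    sample_ids=("ALQ-000002",),
    file_path="raw/plate_reader/run_0042.csv",
    metadata={"assay": "ELISA", "operator": "alice"},
)
print(record.missing_fields())  # ['instrument']
record.update_metadata("instrument", "PlateReader-1", user="alice")
print(record.missing_fields())  # []
```

Keeping the file path, sample IDs, and change history on the same record is what lets someone interpret the result months later without reconstructing context from folder names.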

Where teams usually start (and what breaks)

- Shared drives for raw data, with inconsistent folder naming

- Spreadsheets for inventory, with duplicated IDs and manual reconciliation

- Email threads for approvals and handoffs

- Notebook entries without consistent structure or templates

5) Scaling strategy: what changes at 5, 15, and 30 people

Scaling isn’t only headcount. It’s the number of parallel workflows, the number of sample types, and the number of handoffs across teams.

| Lab Stage | What Changes | What to Standardize First |
|---|---|---|
| 0–5 people | Fast iteration, informal conventions, minimal governance | Sample IDs, basic inventory, simple experiment templates |
| 6–15 people | Parallel projects, more handoffs, onboarding becomes non-trivial | Metadata standards, ownership, request tracking, file organization |
| 16–30+ people | Multiple programs, cross-team dependencies, partner involvement | Permissions, audit trails, workflow stages, reporting and lineage |

Tip: If you wait until you “feel the pain,” you’ll implement systems during peak complexity—when adoption is hardest.

Where Genemod fits: a modern foundation for inventory, documentation, and scale

Genemod is designed for exactly this moment: when a new lab needs to move fast, but still build an operational backbone that won’t collapse under growth.

Start lightweight, then expand without re-implementing

- Sample management + inventory: lifecycle-aware tracking, lineage, storage structure, and ownership

- ELN built for execution: templates, structured metadata, and experiment organization that stays consistent

- Connected files: raw data and reports remain tied to the exact samples and experiments they came from

- Operational workflows: requests, approvals, and handoffs become visible and auditable

- Scalable governance: permissions and audit trails can be introduced as your lab matures

Bottom line: Genemod helps new labs avoid the “tool sprawl” trap—where inventory, ELN, files, and workflow tracking live in separate systems and require constant reconciliation.