Reimagining Lab Software at SLAS 2026: Why AI-Driven LIMS Is the Next Frontier

SLAS 2026 is increasingly about more than automation hardware. The conversation is shifting toward AI-driven lab management software that supports execution—not just documentation. If you’re attending, come see Genemod at Booth #1835.

The shift SLAS 2026 is making visible

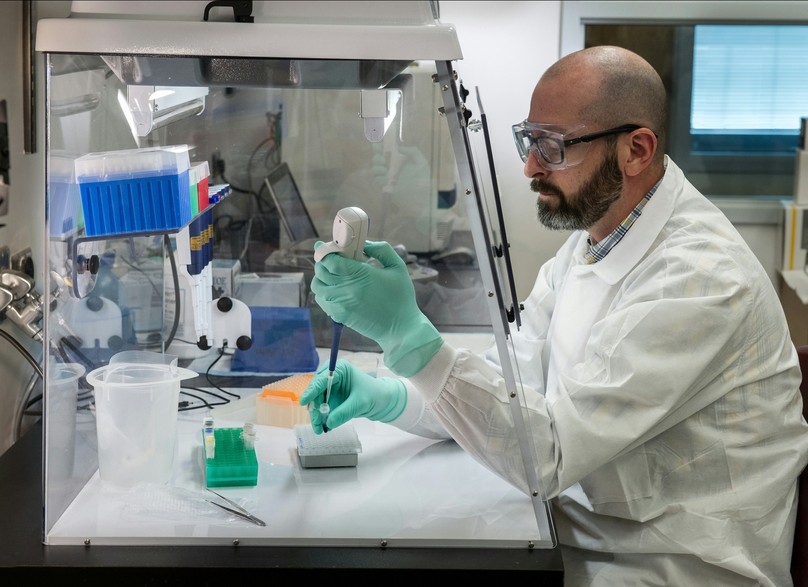

SLAS has always been a place to see what’s next in laboratory automation—from robotics to high-throughput workflows. But one theme is becoming more obvious every year: lab productivity doesn’t scale on hardware alone. It scales when the software layer can connect work, data, and decisions across teams.

That’s why SLAS 2026 is highlighting a growing area of buyer interest: AI-enabled LIMS and lab management platforms designed to reduce coordination overhead and increase confidence in data, not just store records after the fact.

Big idea: The next generation of lab software isn’t built to document what happened. It’s built to help labs run what happens next.

The AI LIMS era is here

For decades, LIMS was treated as a back-office system: a repository of records, audit logs, and compliance fields. ELNs reduced paper, but often stayed disconnected from execution—leaving labs with two systems that captured information, but didn’t necessarily drive better outcomes.

In 2026, that framing is changing.

- AI is no longer a novelty—it’s a utility.

- Labs want systems that shape workflows in real time.

- Analytics must be contextual, not just descriptive.

- Software has to integrate data and guide decisions.

In other words, labs aren’t just looking for better data capture—they’re looking for contextual intelligence embedded into their execution platform.

Why AI matters for LIMS and lab management software

Modern labs generate diverse data: structured results, unstructured files, instrument logs, metadata, assay conditions, and operational signals. AI becomes valuable when it can work across those inputs with the correct scientific and operational context.

1) Connect the dots automatically

AI can surface patterns across experiments and conditions—but only if the platform preserves relationships between samples, workflows, and results. Without those links, AI is forced to guess context that was never captured.
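To make this concrete, here is a minimal Python sketch built on a deliberately simplified, hypothetical data model. The `Sample` and `Result` classes and the `mean_by_condition` function are illustrative assumptions, not Genemod's schema or API. Because each result keeps a link to the sample that produced it, results can be grouped by assay condition. A result whose sample link is missing can only be filed under "UNKNOWN", which is the context gap described above.

```python
from dataclasses import dataclass


# Hypothetical, simplified records for illustration only.
@dataclass
class Sample:
    sample_id: str
    condition: str  # assay condition captured when the sample was registered


@dataclass
class Result:
    sample_id: str      # link back to the sample that produced this result
    experiment_id: str  # link back to the experiment it belongs to
    value: float


def mean_by_condition(samples, results):
    """Average result values per assay condition, using the sample links."""
    condition_of = {s.sample_id: s.condition for s in samples}
    groups = {}
    for r in results:
        # Without a valid sample link, the condition is unknown and must be guessed.
        cond = condition_of.get(r.sample_id, "UNKNOWN")
        groups.setdefault(cond, []).append(r.value)
    return {c: sum(vals) / len(vals) for c, vals in groups.items()}


samples = [Sample("S1", "37C"), Sample("S2", "25C")]
results = [
    Result("S1", "E1", 0.5),
    Result("S1", "E2", 1.0),
    Result("S2", "E1", 0.25),
    Result("S9", "E1", 2.0),  # S9 was never registered, so its context is lost
]
print(mean_by_condition(samples, results))
# {'37C': 0.75, '25C': 0.25, 'UNKNOWN': 2.0}
```

The analysis itself is trivial. The value comes from the links being captured at the time the work was done, rather than reconstructed afterward.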

2) Reduce coordination overhead

As teams scale, the hidden tax is coordination: reconciling spreadsheets, tracking handoffs, chasing approvals, and re-running work due to missing context. AI can help identify inconsistencies early and reduce manual reconciliation—if it has access to structured, connected operational data.
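As an illustration of catching inconsistencies early, the sketch below runs two simple checks over connected records. The function name `find_inconsistencies`, the record shape (pairs of sample ID and condition), and the checks themselves are hypothetical assumptions chosen for clarity. They do not describe any specific product.

```python
from collections import defaultdict


def find_inconsistencies(sample_records, result_sample_ids):
    """Flag two hypothetical kinds of problems before they cause rework.

    sample_records: iterable of (sample_id, condition) pairs, possibly gathered
    from several sources such as different spreadsheets or handoffs.
    result_sample_ids: sample IDs referenced by recorded results.
    """
    issues = []
    conditions = defaultdict(set)
    for sample_id, condition in sample_records:
        conditions[sample_id].add(condition)

    # Check 1: the same sample recorded with conflicting conditions.
    for sample_id, conds in sorted(conditions.items()):
        if len(conds) > 1:
            issues.append(f"{sample_id}: conflicting conditions {sorted(conds)}")

    # Check 2: results that point at a sample nobody registered.
    for sample_id in sorted(set(result_sample_ids) - set(conditions)):
        issues.append(f"{sample_id}: result has no registered sample")

    return issues


records = [("S1", "37C"), ("S1", "25C"), ("S2", "37C")]
for issue in find_inconsistencies(records, ["S1", "S3"]):
    print(issue)
```

Here S1 is flagged because two sources disagree on its condition, and S3 is flagged because a result references it but no sample record exists. Both checks only work because the records are structured and linked. With free-form spreadsheets, the same problems typically surface later as manual reconciliation or re-run work.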

3) Guide decisions during execution

Instead of producing static reports after work is done, AI can provide operational insight during execution—suggesting next best actions, surfacing risks, and highlighting workflow bottlenecks in real time.

4) Support adaptive lab operations

Labs evolve constantly. Metadata changes. Protocols change. Programs multiply. AI needs a platform that can adapt without breaking historical analysis or forcing a re-implementation every time the science shifts.

What SLAS 2026 suggests about where lab software is going

Across conversations and demos, four trends stand out:

AI as a layer, not a checkbox

The most credible AI strategies treat intelligence as something built on top of a flexible data foundation—not bolted onto a rigid legacy system.

Integration over silos

Labs are moving away from disconnected point tools. The direction is unified platforms that connect samples, experiments, files, and workflows.

Context over volume

Teams aren’t overwhelmed by “too much data.” They’re overwhelmed by disconnected data. AI needs context to deliver real value.

Execution-centric design

Lab software should be designed first to help labs run day-to-day operations. Compliance and reporting still matter, but they shouldn’t come at the cost of adoption and speed.

Genemod at Booth #1835: AI-powered execution, not just recordkeeping

At SLAS 2026, Genemod is exhibiting at Booth #1835 to showcase an approach aligned with these trends: a unified LIMS + ELN platform built on a connected data model that makes AI-driven analysis and automation practical.

Genemod focuses on the problem that keeps AI from delivering value in fragmented environments: preserving context across scientific work.

- Connected data model: Samples, experiments, files, and workflows stay linked, so analysis retains the full scientific story.

- Operational visibility: Ownership, status, requests, and approvals create a real execution layer—useful for teams, not just audits.

- Flexible structure: Workflows evolve without breaking history or forcing a full reconfiguration cycle.

- AI-ready foundation: Structured, connected data enables analysis that goes beyond dashboards and static reporting.

What this enables: AI that can answer cross-cutting questions—across samples, experiments, and operations—without the lab rebuilding reports every time the science changes.

Questions to ask before adopting an “AI LIMS”

Not all AI claims are equally grounded. If you’re evaluating an AI-enabled lab platform, these questions quickly separate real capability from marketing:

- Does the platform connect samples, experiments, and files in a single data context?

- Can AI surface insights beyond summaries and dashboards?

- Will analytics stay stable as workflows and metadata evolve?

- Does the system reduce coordination overhead—or introduce more configuration burden?

- Can the platform help identify risks early (inconsistency, bottlenecks, rework), not just report outcomes?

In 2026, platforms that only interpret isolated datasets will increasingly feel like yesterday’s tools. The next generation of lab software needs to support intelligence, agility, and scale—without sacrificing adoption.

Where this goes after SLAS 2026

SLAS 2026 reinforces a bigger shift: lab software is becoming an active partner in R&D—not a passive record of completed work. When AI is built on a flexible, contextual data foundation, it enables labs to optimize execution and scale with confidence.

If you’re attending SLAS, visit Genemod at Booth #1835 to see what AI-driven lab execution looks like when the data platform is designed correctly from the start.